If you are looking for a link to the June 2003 GAE workshop pages, please go here.

The importance of a Grid Analysis Environment (GAE) for the LHC experiments is hard to overestimate. Although the need for Grids, and their utility, have been proven in the production environment by the LHC experiments as a whole, their significance and critical role in physics analysis have yet to be realised.

The work on GAE at Caltech is a natural progression from our completed projects GIOD and ALDAP, our recently funded project CAIGEE, our collaboration in PPDG, GriPhyN and iVDGL, and, more recently, the GECSR project.

The development of the GAE is the acid test of the utility of Grid systems for science. The GAE will be used by a large, diverse community. It will need to support hundreds, even thousands, of analysis tasks with widely varying requirements. It will need to employ priority schemes and robust authentication and security mechanisms. And, most challenging, it will need to operate well in what we expect to be a severely resource-limited global computing system. So we believe that the GAE is the key to the success or failure of the Grid for physics, since it is where the critical physics analysis gets done, where the Grid end-to-end services are exposed to a very demanding clientele, and where the physicists themselves have to learn how to collaborate at large distances on challenging analysis topics.

A list of recent publications related to GAE can be found here.

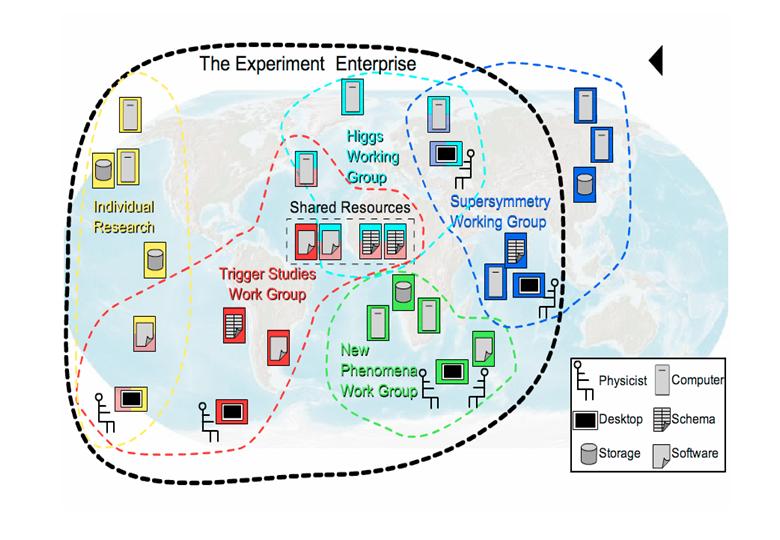

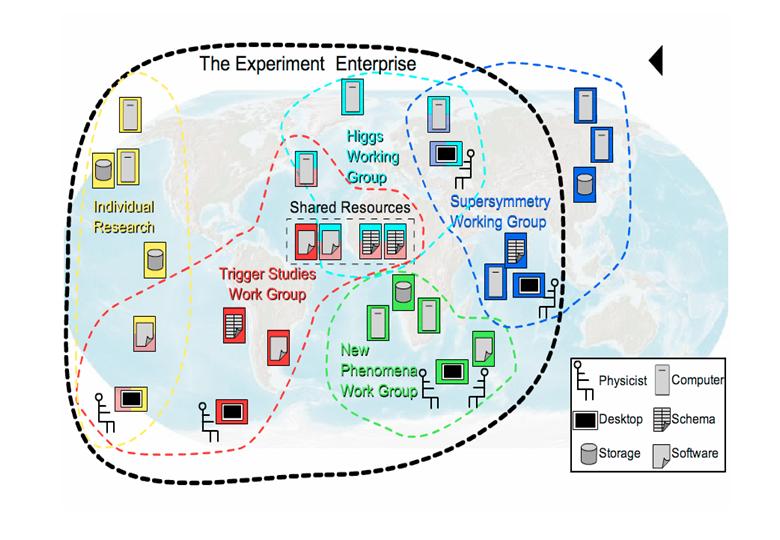

In the following Figure we show a schematic representation (author: Lothar Bauerdick, FNAL) of how the dynamic process of supporting analysis tasks in a worldwide community of physicists might look.

The diagram shows a "snapshot" in time of analysis activities in the experiment. Groups of individuals, separated by large geographic distances, are working on specific analysis topics, Supersymmetry, for example. Resources in the Grid system are being shared between the active groups. The dashed-line boundaries enclosing each of the groups move and change shape and size as the composition or requirements of the groups change.

In the CAIGEE project, we have proposed a candidate software architecture to support analysis in the Grid. The architecture is based on the use of Web Services, or Portals, which mediate the access between the analysis clients and the Grid resources. The most recent version of the CAIGEE architecture is shown below.

The important features of the architecture are its support for a wide variety of clients (both platforms and analysis software), and its use of Web Services to optimise the user's view and use of the Grid resources.

Web services are computing services offered via the Web. In a typical Web services scenario, an end-user application sends a request to a service at a given URL using the SOAP protocol over HTTP. The service receives the request, processes it, and returns a response. An often-cited example of a Web service from the business world is that of a stock quote service, in which the request asks for the current price of a specified stock, and the response gives the stock price. This is one of the simplest forms of a Web service in that the request is satisfied almost immediately, with the request and response being parts of the same method call.
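As a rough illustration of that request/response exchange, the Python sketch below builds a SOAP 1.1 envelope for a stock-quote call and extracts the price from a reply. The method name `GetQuote`, the namespace `urn:example:stockquote`, the `price` element and the `SOAPAction` value are all hypothetical; a real service publishes its own names in its WSDL. The `send` helper shows how the envelope would be POSTed over HTTP but is not required to build or parse messages.

```python
import urllib.request
import xml.etree.ElementTree as ET

SOAP_ENV = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope namespace
SVC_NS = "urn:example:stockquote"  # hypothetical service namespace
ET.register_namespace("soap", SOAP_ENV)
ET.register_namespace("q", SVC_NS)


def build_request(symbol: str) -> bytes:
    """Wrap a call to the hypothetical GetQuote method in a SOAP 1.1 envelope."""
    env = ET.Element(f"{{{SOAP_ENV}}}Envelope")
    body = ET.SubElement(env, f"{{{SOAP_ENV}}}Body")
    call = ET.SubElement(body, f"{{{SVC_NS}}}GetQuote")
    ET.SubElement(call, f"{{{SVC_NS}}}symbol").text = symbol
    return ET.tostring(env, encoding="utf-8", xml_declaration=True)


def parse_response(xml_bytes: bytes) -> float:
    """Pull the price out of a GetQuote response envelope."""
    price = ET.fromstring(xml_bytes).find(f".//{{{SVC_NS}}}price")
    if price is None or price.text is None:
        raise ValueError("response contains no price element")
    return float(price.text)


def send(url: str, envelope: bytes, timeout: float = 10.0) -> bytes:
    """POST the envelope over HTTP; SOAP 1.1 uses text/xml and a SOAPAction header."""
    req = urllib.request.Request(
        url,
        data=envelope,
        headers={
            "Content-Type": "text/xml; charset=utf-8",
            "SOAPAction": f'"{SVC_NS}#GetQuote"',  # hypothetical action URI
        },
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read()
```

A client would then call `parse_response(send(url, build_request("IBM")))`, where `url` is the service endpoint. Because the request and the response belong to the same call, this is the "simplest form" of Web service described above.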

Providers and consumers of Web services are typically different organizations with diverse software and hardware platforms, which makes Web services a potentially viable solution for providing distributed computing connectivity. An institution or organization can be the provider of Web services and also the consumer of other Web services. For example, a group of researchers could be in the consumer role when it uses a Web service to read and analyze data from other service providers, and in the provider role when it supplies other researchers with the final product of the analysis.

At Caltech, we have been developing Web Services for physics analysis data access, and proving their feasibility. Several types of data, ranging from detailed event objects stored in Objectivity ORCA databases to Tag objects stored in Objectivity Tag databases, have been made available through prototype Web Services. For the Objectivity ORCA databases, we developed a set of tools that allow lightweight access to detailed event objects through Web Services, at granularities ranging from the Federation metadata down to the event hits and tracks. Data accessed this way can then be used by a variety of tools and software programs. In 2002 we successfully provided distributed access, via a Web Service, to FNAL’s JetMET Ntuple files produced from ORCA Trigger analysis. This Web Service was implemented both with a SQL Server database backend running under Windows and with an Oracle9i database backend running under Linux. The user's view of the interface was identical in both cases, demonstrating how easily we could hide the heterogeneity and details of the data persistence mechanism.
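The idea of hiding the persistence mechanism behind one interface can be sketched in a few lines. The example below is not the Caltech implementation (which used .NET with SQL Server and Oracle9i); it is a minimal Python sketch, assuming a hypothetical `tags` table with `run`, `event` and `et` columns, in which the service methods depend only on the generic DB-API 2.0 connection interface. `sqlite3` stands in for the real backend only because it ships with Python.

```python
import sqlite3
from typing import Any


class TagService:
    """Hypothetical Tag-data service whose methods do not depend on the database backend.

    `conn` can be any DB-API 2.0 connection; swapping in a SQL Server or Oracle
    driver would leave the methods (and therefore the client's view) unchanged.
    """

    def __init__(self, conn: Any) -> None:
        self._conn = conn

    def _scalar(self, sql: str) -> int:
        cur = self._conn.cursor()
        cur.execute(sql)
        (value,) = cur.fetchone()
        return int(value)

    def event_count(self) -> int:
        return self._scalar("SELECT COUNT(*) FROM tags")

    def run_count(self) -> int:
        return self._scalar("SELECT COUNT(DISTINCT run) FROM tags")

    def query(self, sql: str) -> list[tuple]:
        """Run a caller-supplied query and return plain tuples (no driver-specific row types)."""
        cur = self._conn.cursor()
        cur.execute(sql)
        return [tuple(row) for row in cur.fetchall()]


def demo_connection() -> sqlite3.Connection:
    """In-memory stand-in for a real Tag database, filled with made-up rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE tags (run INTEGER, event INTEGER, et REAL)")
    conn.executemany("INSERT INTO tags VALUES (?, ?, ?)",
                     [(1, 1, 10.0), (1, 2, 55.5), (2, 1, 30.0)])
    return conn
```

Usage: `TagService(demo_connection()).event_count()` counts the demo rows. One caveat of this approach is that SQL dialects and parameter styles still differ between drivers, so a production service would need to keep queries portable or translate them per backend.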

The Clarens project started in the spring of 2001 as a remote analysis server and grew into a Grid-enabled web services layer, with client and server functionality to support remote analysis by end-user physicists. The project web page with mailing lists, source archives (CVS) and bug tracking system is hosted at clarens.sourceforge.net. Clarens is currently deployed at CMS sites in the US, at CERN, and in Pakistan.

The server architecture was changed from a compiled CGI executable to an interpreted Python framework running inside the Apache http server, improving transaction throughput by a factor of ten. A Public Key Infrastructure (PKI) security implementation was developed to authenticate clients using certificates created by Certificate Authorities (CAs). All client/server communication still takes place over commodity http/https protocols with authentication done at the application level.

Authorization of web service requests is achieved using a hierarchical system of access control lists for users and groups forming part of a so-called Virtual Organization (VO). As a side-effect, Clarens offers a distributed VO management system with administration delegation away from a central all-powerful administrator.
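The exact Clarens ACL rules are not given here, so the following is only a minimal sketch of how a hierarchical scheme of this kind can work. It assumes (i) dotted method names such as `file.read` that inherit ACLs from `file` and then from the root, (ii) groups that may contain users or other groups, and (iii) that a deny entry overrides an allow entry at the same level, with access denied by default. The `ACL` and `Authorizer` names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ACL:
    allow: set[str] = field(default_factory=set)  # user DNs or group names
    deny: set[str] = field(default_factory=set)


class Authorizer:
    def __init__(self, groups: dict[str, set[str]], acls: dict[str, ACL]) -> None:
        self.groups = groups  # group name -> members (users or nested groups)
        self.acls = acls      # dotted method path -> ACL ("" is the root)

    def principals(self, user: str) -> set[str]:
        """The user plus every group containing the user, directly or via nested groups."""
        found = {user}
        changed = True
        while changed:  # repeat until no new enclosing group is discovered
            changed = False
            for group, members in self.groups.items():
                if group not in found and members & found:
                    found.add(group)
                    changed = True
        return found

    def allowed(self, user: str, method: str) -> bool:
        who = self.principals(user)
        parts = method.split(".")
        # Most specific path first: "file.read" -> "file" -> "" (root).
        for i in range(len(parts), -1, -1):
            acl = self.acls.get(".".join(parts[:i]))
            if acl is None:
                continue
            if who & acl.deny:
                return False
            if who & acl.allow:
                return True
            # An ACL that names none of the user's principals defers to its parent.
        return False  # default deny
```

Because membership is resolved through nested groups, a VO administrator can delegate control of a subgroup (for example an analysis team) without touching the ACLs that refer to the enclosing group.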

Server-side applications made available through Clarens include the obligatory file access methods, proxy certificate escrow, access to RDBMS data through SOCATS (see below), SDSC Storage Resource Broker (SRB) access, VO administration and shell command execution. Users on Clarens-enabled servers are able to deploy their own web services without system administrator involvement. Documentation and API descriptions for all methods are discoverable through a standard interface. Access to each web service method is controlled individually through the ACL system mentioned above.
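One widely used "standard interface" for method discovery is XML-RPC introspection (`system.listMethods`, `system.methodHelp`, `system.methodSignature`), which Python's standard library supports on both the server and client side. The sketch below assumes such an endpoint; the stub method `file.ls` and its behaviour are hypothetical, and the local server exists only to make the example self-contained.

```python
import threading
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer


def file_ls(path: str) -> list:
    """List the files under PATH (hypothetical stub returning fixed names)."""
    return ["a.root", "b.root"]


def start_server() -> SimpleXMLRPCServer:
    """Start a local XML-RPC server on a free port in a background thread."""
    server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
    server.register_introspection_functions()  # adds system.listMethods/methodHelp/methodSignature
    server.register_function(file_ls, "file.ls")
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def discover(url: str) -> dict[str, str]:
    """Map every method the server advertises to its help text."""
    proxy = ServerProxy(url)
    return {name: proxy.system.methodHelp(name) for name in proxy.system.listMethods()}
```

A client only needs the server URL: `discover(f"http://{host}:{port}/")` returns the method names together with their documentation, so no out-of-band API description is required.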

The services described above are available from Python scripts and C++ programs, as well as from standalone Java applications and browser-based Java applets. A ROOT-based client was used to demonstrate distributed analysis of CMS JetMET data at the Supercomputing 2002 conference in Baltimore, MD. Clarens was also selected to be part of the CMS first data challenge (DC1) in 2004.

We have begun development of a general-purpose tool, called SOCATS (STL Optimized Caching and Transport System), to deliver large SQL result sets in an optimized binary form. The main purpose of SOCATS is to deliver relational result sets to C++ clients as binary-optimized STL vectors and maps. The data returned from the SOCATS server to the client will be described through a standard web service WSDL, but the data itself will be delivered in binary form. This avoids the overhead of parsing large amounts of XML markup for large datasets. It will also reduce latency problems in WAN environments, since large batches of rows that efficiently fill the network pipe will be transferred together. We intend to use Clarens as our RPC layer for this product.
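The two ideas in the SOCATS design, fixed-width binary rows instead of XML and row batching to fill the network pipe, can be illustrated with a short sketch. SOCATS itself targets C++ STL containers; Python's `struct` module is used here only to keep all examples in one language. The row layout `(run: int32, event: int32, et: float64)` and the length-prefixed batch format are assumptions, not the SOCATS wire format.

```python
import struct
from typing import Iterable, Iterator

Row = tuple[int, int, float]
ROW = struct.Struct("<iid")  # hypothetical row: run int32, event int32, et float64 (16 bytes)
HEADER = struct.Struct("<I")  # row count prefix for each batch (4 bytes)


def _pack(batch: list[Row]) -> bytes:
    return HEADER.pack(len(batch)) + b"".join(ROW.pack(*r) for r in batch)


def encode_batches(rows: Iterable[Row], batch_size: int = 1024) -> Iterator[bytes]:
    """Yield length-prefixed binary batches so many rows travel per network write."""
    batch: list[Row] = []
    for row in rows:
        batch.append(row)
        if len(batch) == batch_size:
            yield _pack(batch)
            batch = []
    if batch:  # flush the final, possibly short, batch
        yield _pack(batch)


def decode_batch(buf: bytes) -> list[Row]:
    """Unpack one batch without any text parsing."""
    (n,) = HEADER.unpack_from(buf, 0)
    return [ROW.unpack_from(buf, HEADER.size + i * ROW.size) for i in range(n)]


def as_xml(rows: Iterable[Row]) -> bytes:
    """The same rows as naive XML markup, for size comparison only."""
    return "".join(
        f"<row><run>{r}</run><event>{e}</event><et>{x!r}</et></row>" for r, e, x in rows
    ).encode()
```

For 2,500 rows in batches of 1,000, the binary stream is exactly 3 × 4 + 2,500 × 16 = 40,012 bytes, whereas the XML form is several times larger and must be parsed character by character on the client.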

The GroupMan application was developed in response to a need for more user-friendly administration of current-generation LDAP-based virtual organizations.

GroupMan can be used to populate the LDAP server with the required data structures and certificates downloaded from Certificate Authorities (CAs). Certificates may also be imported from certificate files in the case of CAs that do not offer certificate downloads. These certificates can then be used to create and manage groups of users using a platform-independent graphical user interface written in Python.

The VO data is stored in such a way that it can be extracted using standard Grid-based tools to produce so-called "gridmap" files used by the Globus toolkit. These files map host system usernames to individuals or systems identified by their certificates, thereby providing a coarse-grained authorization mechanism.
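A Globus gridmap file has one line per certificate subject: the quoted distinguished name (DN), then the local account it maps to. The sketch below shows that extraction step, assuming the VO has already been read into plain dictionaries (GroupMan actually keeps it in LDAP) and assuming a hypothetical policy in which every member of a group shares one local account. The quotes are needed because DNs often contain spaces.

```python
def build_gridmap(groups: dict[str, set[str]], accounts: dict[str, str]) -> str:
    """Map each member DN of every group listed in `accounts` to that group's local account.

    If a DN belongs to several mapped groups, the alphabetically first group wins.
    """
    mapping: dict[str, str] = {}
    for group in sorted(accounts):
        for dn in sorted(groups.get(group, set())):
            if '"' in dn:
                raise ValueError(f"cannot quote DN: {dn!r}")
            mapping.setdefault(dn, accounts[group])
    # One '"DN" account' line per certificate subject.
    return "".join(f'"{dn}" {acct}\n' for dn, acct in sorted(mapping.items()))
```

Mapping whole groups onto shared accounts is what makes this authorization coarse-grained: the host only sees the local username, not which VO subgroup or individual ACL entry granted access.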

This GAE client for handheld devices (PDAs), based on JAS and interfacing to Clarens, has been developed in collaboration with computer scientists at NUST, Islamabad. The picture shows an example histogram as it is displayed on the PDA.

We have constructed a four-screen desktop analysis setup that operates from a single server, using a single graphics card. The 4-way graphics card used allows an affordable setup to be built that offers enough screen space and pixels for most or all of:

Our prototype setup works on a desktop with four 20" displays for a cost of about $6-7k. One can imagine variations of this setup in small and large meeting rooms, using different displays. The cost of such a setup is certain to drop substantially in the future.

Our Web Services are being catalogued at the Wooster UDDI Server (contact julian@cacr.caltech.edu if you'd like access to this server).

Example "Tag" Web Service (SQL Server): This Web service provides access to a small database of approximately 180,000 events, stored in a SQL Server database at Caltech. Web Service methods include those to fetch the total run and event counts, issue arbitrary queries on the database, and receive Tag event objects as base64-encoded data streams. Server technology is Microsoft .NET.
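On the client side, a base64-encoded Tag stream has to be decoded back into records. The sketch below assumes a hypothetical fixed-width Tag record (run int32, event int32, number of jets int16, missing ET float32, little-endian); the real Tag object layout is not specified here. `encode_tags` plays the role of the server so the round trip can be checked.

```python
import base64
import struct

Tag = tuple[int, int, int, float]
TAG = struct.Struct("<iihf")  # hypothetical record: run, event, n_jets, missing ET (14 bytes)


def encode_tags(tags: list[Tag]) -> str:
    """Server side: pack records back to back, then base64 them for text-safe transport."""
    return base64.b64encode(b"".join(TAG.pack(*t) for t in tags)).decode("ascii")


def decode_tags(b64: str) -> list[Tag]:
    """Client side: undo base64, then split the bytes into fixed-width records."""
    raw = base64.b64decode(b64)
    if len(raw) % TAG.size:
        raise ValueError("truncated Tag stream")
    return list(TAG.iter_unpack(raw))
```

Base64 lets binary records travel inside an XML response at a cost of roughly one third extra size, which is still far cheaper to parse than one XML element per field.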

Example "Tag" Web Service (Oracle9i): This service provides identical methods to the one above, but here the data are hosted in an Oracle9i database located at CERN.

Example nTuple Web Service: This service provides access to a large (~14 GB) repository of JetMET data from the CMS experiment (the original PAW nTuple data were obtained from the CMS JetMET team at Fermilab). The data are stored in a SQL Server database. The largest table has 132,000,000 rows. Server technology is Microsoft .NET.

Information on GAE Middleware (SOCATS work) (by Eric Aslakson)

PPDG Report from the Caltech Group for March-June 2002.

Visit of Tony Johnson, Joseph Perl, Max Turri, Mark Donszelmann (SLAC) to discuss GAE and JAS

Measurements of 3ware RAID array server performance.

iGrid2002 Demonstration (September 2002)

SuperComputing 2002 Demonstration (November 2002)

We broke the Internet2 Land Speed record while at Supercomputing 2002. Article in WIRED.

The NSF-funded "CAIGEE" Project (Caltech, UCSD, UCR, UCD)

Overview of Current Work

CMS Grid Integration Page: http://cmsdoc.cern.ch/cms/ccs/grid/

California Tier2 Prototype (Background and History)

Caltech Tier2 Hardware Details (October 2002)

Overview and Status - Presentation at the CMS ATLAS Review in Berkeley, Jan 2003

Presentations to Mary Anne Scott (DoE/MICS Program Manager), May 2002

CAIGEE: This material is based upon work supported by the National Science Foundation under Grant No. 0218937. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.